Rotten Tomatoes vs. Metacritic

In my younger and more vulnerable years my advisor gave me some advice I’ve been turning over in my mind ever since. “Whenever you feel like using statistics,” he told me, “just remember the assumptions you make.”

He didn’t say any more, but we’ve always been unusually communicative in a reserved way, and I understood that he meant a great deal more than that. In consequence, I am inclined to approach any statistical method with a great deal of skeptical reservation.

Hence, given my interest in cinema, when years ago I first encountered websites that aggregate movie reviews, I was excited yet cautious.

The two most popular sites that try to summarize the critics’ consensus are Rotten Tomatoes and Metacritic. Both list most of the critics’ reviews of a given movie, and you can click through and read the reviews themselves. But their main ambition is to summarize all this information in a single meaningful number, so you can easily compare movies.

Rotten Tomatoes converts each review into a binary decision: fresh or rotten. Did the critic enjoy the movie or not? Or rather, would they recommend it or not? This raises the question of how to decide whether a review is positive. Reviews with a rating greater than 60% of the available score range count as positive (2.5 stars out of 4 is fresh, for example). If there is no rating, human beings read the review and decide whether it was positive or negative. Combining all of these binary decisions from their list of approved (established) critics, we end up with one number: the percentage of positive reviews. Dubbed the “Tomatometer”, this is the metric that summarizes the consensus. (They also show an average rating in a tiny font size, clearly presented as secondary information rather than the main focus.)
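To make the binarization concrete, here is a minimal Python sketch of the Tomatometer as described above. It assumes every rating scale starts at zero, that a rating strictly above 60% of the maximum counts as fresh (the wording used here; how a rating of exactly 60% is treated is not covered), and that unrated reviews arrive with a human verdict already attached. The names `is_fresh` and `tomatometer` are hypothetical and not part of any Rotten Tomatoes API.

```python
from typing import Optional, Sequence, Tuple

# Hypothetical record: (rating, scale_max, human_verdict).
# rating is None when the critic gave no score; human_verdict is then used.
Review = Tuple[Optional[float], Optional[float], Optional[bool]]


def is_fresh(review: Review, threshold: float = 0.6) -> bool:
    """Collapse one review into fresh (True) or rotten (False)."""
    rating, scale_max, human_verdict = review
    if rating is None:
        # No numeric rating: rely on a human reader's positive/negative call.
        return bool(human_verdict)
    # Assumes the scale starts at 0, so rating / scale_max is the share of the range.
    return rating / scale_max > threshold


def tomatometer(reviews: Sequence[Review], threshold: float = 0.6) -> float:
    """Percentage of reviews classified as fresh."""
    fresh = sum(is_fresh(r, threshold) for r in reviews)
    return 100 * fresh / len(reviews)


reviews = [
    (2.5, 4, None),      # 62.5% of the range: fresh
    (2, 4, None),        # 50%: rotten
    (4, 5, None),        # 80%: fresh
    (None, None, True),  # unrated, judged positive by a human reader
]
print(tomatometer(reviews))  # 75.0
```

Note that the 62.5% review and the 80% review contribute exactly the same amount: once a review crosses the line, how far it crossed is discarded.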

Metacritic rescales each critic’s rating to the range 0-100. For example, 2.5 stars out of 4 becomes 63. If there is no rating, human beings read the review and decide the approximate rating it implies (usually in increments of 10). The weighted average of these scores, called the “Metascore”, is the one number that summarizes the critics’ feelings. The average is weighted in the sense that some critics’ opinions are treated as more important based on (a subjective idea of) quality, stature, prestige and respect. The scores are also normalized (as in grading on a curve).
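A comparable sketch of the Metascore follows. The rescaling reproduces the “2.5 out of 4 becomes 63” example above; the critic weights are made-up placeholders, since Metacritic does not publish its weighting, and the curve-style normalization step is left out because its details are not described here. `rescale` and `metascore` are hypothetical names.

```python
import math
from typing import Sequence, Tuple


def rescale(rating: float, scale_max: float) -> int:
    """Map a rating on a 0..scale_max scale to 0-100, rounding halves up."""
    # Python's round() sends 62.5 to 62 (round-half-to-even),
    # so round half up explicitly to match the 2.5/4 -> 63 example.
    return math.floor(100 * rating / scale_max + 0.5)


def metascore(scored: Sequence[Tuple[float, float]]) -> float:
    """Weighted average of (score_0_100, weight) pairs; no normalization."""
    total_weight = sum(w for _, w in scored)
    return sum(s * w for s, w in scored) / total_weight


print(rescale(2.5, 4))  # 63
# Hypothetical weights: the second critic counts double.
print(metascore([(63, 1.0), (80, 2.0), (40, 1.0)]))  # 65.75
```

Unlike the Tomatometer sketch, every point of every score moves the result, which is the “more information per review” property discussed below.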

The ‘Tomatometer’ can be thought of as the probability that a ‘typical’ critic would think positively about the movie. The ‘Metascore’ represents the expected rating that our ‘typical’ critic would give the movie if she were scoring on a scale of 0 to 100.
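In symbols, a rough formalization that ignores the human-judged unrated reviews and Metacritic’s curve normalization: let S be the rescaled 0-100 score of a randomly chosen critic, let s_1, …, s_n be one movie’s observed rescaled scores, and let w_1, …, w_n be Metacritic’s weights (which are not public). The two summaries then estimate different features of the same distribution:

$$
\text{Tomatometer} \approx 100 \cdot \Pr(S > 60) \approx \frac{100}{n} \sum_{i=1}^{n} \mathbf{1}[s_i > 60],
\qquad
\text{Metascore} \approx \mathbb{E}[S] \approx \frac{\sum_{i=1}^{n} w_i s_i}{\sum_{i=1}^{n} w_i}
$$

The first is a tail probability and the second is a mean, so nothing forces them to rank two movies in the same order.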

Before collapsing everything into a single number, Metacritic keeps more information about each review. Its ‘model’ of a single review (a score of 0-100) is of a higher order than Rotten Tomatoes’ binary ‘model’. This doesn’t necessarily mean it’s better, of course. Perhaps the only solid information that can be inferred from a critic’s rating is whether they liked the movie or not, and the rest (how much they loved or hated it) is mostly noise. In other words, when asking a simpler question (does a typical critic like this or not?), you might have less of a chance of getting it wrong. But let’s look at how critics actually grade movies.

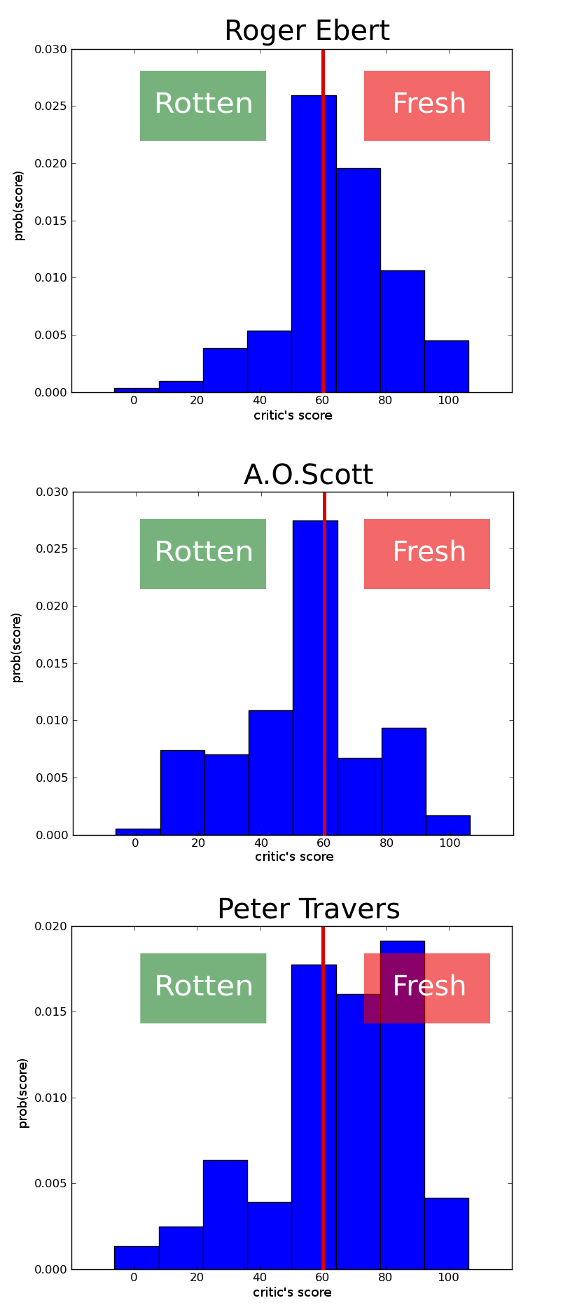

The three charts on the right with the blue bars show the rating distributions of three well-respected critics, rescaled to 0-100: Roger Ebert (Chicago Sun-Times, 3944 reviews), A.O. Scott (The New York Times, 1212 reviews) and Peter Travers (Rolling Stone, 2043 reviews). I scraped the scores from metacritic.com and did not alter them in any way other than this straightforward rescaling. The red line marks the 60% threshold that Rotten Tomatoes uses to separate positive (fresh) from negative (rotten) reviews.

The first thing that jumps out is that the distributions are peaked, with extremely high and low scores appearing much less often, which is not surprising. Another observation is that critics differ in where their distributions are centered: the mean scores are quite different for the three critics (Scott’s scores are balanced and centered in the middle, while Ebert and Travers give higher scores more frequently).
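Summaries like those in the charts can be computed from a list of rescaled scores with a few lines of standard-library Python. The sketch below counts scores in ten-point buckets and reports the mean. The two sample lists are invented stand-ins for illustration, not the scraped Ebert, Scott or Travers data, and the bucket width is an arbitrary choice.

```python
from collections import Counter


def histogram(scores, width=10):
    """Count 0-100 scores per bucket of the given width (100 joins the top bucket)."""
    top = 100 - width
    return Counter(min(s // width * width, top) for s in scores)


def mean(scores):
    return sum(scores) / len(scores)


# Invented sample data for two hypothetical critics.
critics = {
    "balanced": [30, 45, 50, 50, 55, 60, 60, 65, 70, 85],
    "generous": [50, 63, 75, 75, 75, 88, 88, 88, 100, 100],
}

for name, scores in critics.items():
    bins = histogram(scores)
    print(name, round(mean(scores), 1), sorted(bins.items()))
```

Here the “balanced” critic averages 57.0 with its peak in the 50s and 60s, while the “generous” one averages 80.2 with its mass in the 70s and 80s: the same kind of shift in center the charts show.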

Looking at these figures, some assumptions behind Rotten Tomatoes’ method seem pretty shaky to me:

a) “A critic’s reaction can be accurately summarized as positive or negative.”

b) “The rating gives a clear indication of whether the critic liked it or not.”

These distributions peak right in the region where Rotten Tomatoes draws the line between fresh and rotten. So not just a few, but a major portion of the movies fall into that gray area, on the exact border of “is it positive or negative?” Rotten Tomatoes takes this peaked distribution and models it with two bins: “Rotten” from 0 to 60, and “Fresh” from 60 to 100. The additional figure on the right shows what this binning looks like for A.O. Scott: the peak of the distribution disappears in the two-bin model. The largest chunk of movies sits right around the cutoff score of 60, so whether a review lands on the ‘fresh’ or the ‘rotten’ side is decided by very small differences. Also, since the mean scores differ from critic to critic, the hard cutoff at 60 means something different for each critic.
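Two quantities from this paragraph can be checked directly on any critic’s rescaled scores: how many reviews sit within a few points of the 60 cutoff, and what fraction of that critic’s own reviews the cutoff labels rotten. The sketch below uses invented scores for two hypothetical critics and an arbitrary band of 5 points; both are assumptions for illustration, and “fresh” follows the “above 60” rule used throughout.

```python
def near_cutoff_share(scores, cutoff=60, band=5):
    """Fraction of 0-100 scores within +/- band points of the cutoff."""
    return sum(abs(s - cutoff) <= band for s in scores) / len(scores)


def rotten_share(scores, cutoff=60):
    """Fraction of a critic's own scores that a 'fresh means above cutoff' rule calls rotten."""
    return sum(s <= cutoff for s in scores) / len(scores)


# Invented scores for two hypothetical critics, not scraped data.
critics = {
    "centered near 60": [40, 50, 55, 58, 60, 60, 62, 63, 65, 80],
    "centered near 70": [50, 60, 65, 68, 70, 70, 72, 75, 80, 90],
}

for name, scores in critics.items():
    print(f"{name}: {near_cutoff_share(scores):.0%} within 5 points of 60, "
          f"{rotten_share(scores):.0%} rotten")
```

For the first critic, 70% of reviews lie within 5 points of the line, so a small shift in how they score would flip many verdicts; the same cutoff marks 60% of the first critic’s reviews as rotten but only 20% of the second’s.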

There is another, subtler point. The Tomatometer gives you a probability of liking the movie. It implies that if you go to a movie with an 80% rating, you will probably like it. If you see a movie with a 99% rating, again, you will probably like it. It absolutely does not imply that you will like the 99% movie more than the 80% movie. In fact, the opposite might occur very frequently; this follows directly from the assumptions, the question asked, and the method used to answer it. (This wouldn’t count as an error: if you liked both, the Tomatometer was correct, since it never said how much you’d like it.) However, users often think exactly that: a 99% movie is “better”, and they will like it “more”, because critics generally use ratings (as scores) in this second sense, and this is the frame moviegoers are used to thinking in.
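A toy example of this reversal: a movie that every critic finds merely decent can beat, on the Tomatometer, a movie most critics love. The scores below are invented, the “fresh” rule is the “above 60” cutoff, and the average is unweighted; the text above compares 99% with 80%, and this sketch happens to produce 100% versus 80%.

```python
def fresh_share(scores, cutoff=60):
    """Tomatometer-style percentage of 0-100 scores above the cutoff."""
    return 100 * sum(s > cutoff for s in scores) / len(scores)


def average(scores):
    """Unweighted mean, a stand-in for a Metascore without weights or normalization."""
    return sum(scores) / len(scores)


# Invented 0-100 scores from ten critics per movie.
decent_everywhere = [65] * 10  # everyone mildly positive
loved_by_most = [95, 90, 100, 90, 95, 85, 90, 100, 50, 55]  # raves plus two pans

for name, scores in [("decent", decent_everywhere), ("loved", loved_by_most)]:
    print(name, fresh_share(scores), average(scores))
```

The “decent” movie gets a Tomatometer of 100.0 with an average of 65.0, while the “loved” movie gets 80.0 with an average of 85.0: the percentage ranks them one way, the average the other.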

I believe that Metacritic’s approach to the problem of summarizing critic opinions is the better one. Naturally, a person’s reaction to a movie is very high-dimensional information, and any attempt to collapse it into a single number is a huge, lossy compression. However, when on a rainy Friday afternoon you want to quickly decide what to see, I suggest checking the Metascore instead of the Tomatometer.

As I sit here brooding on the subtleties of film rankings, it becomes abundantly clear that the perfect approach still eludes us. But that’s no matter—tomorrow we will run faster, stretch out our arms farther…. And then one fine morning—

So we beat on, boats against the current, borne back ceaselessly into the past.